Opinion: San Francisco’s “killer robots” and surveillance are just the latest in a technology-fueled police power trip

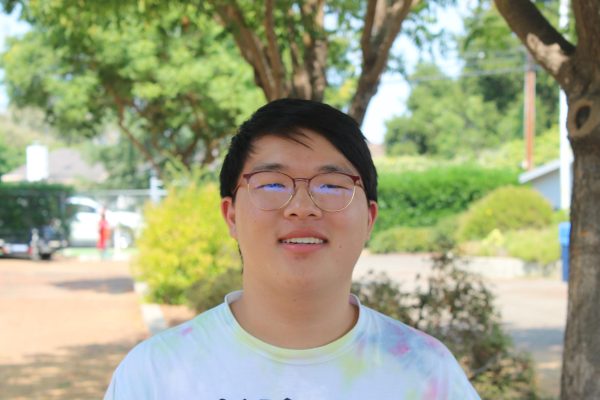

A bomb disposal robot used by the Cleveland Police. The San Francisco Police Department wants the ability to deploy lethal force using robots like these — a sign of our increasingly cutting-edge, power-hungry policing system.

Imagine turning on the TV to watch the daily news and seeing someone being slaughtered by a police robot. Imagine the police gaining access to private surveillance cameras in real time, without proof of a crime. Sound like a dystopian future? Well, it could very soon be our reality.

On Tuesday, November 29, the San Francisco Board of Supervisors gave preliminary approval to a policy granting the San Francisco Police Department (SFPD) license to use deadly force with its robots. Per this proposed policy, police robots will be allowed to detonate bombs if officers believe there’s an imminent threat to the lives of police or the public. And, this past September, the Board of Supervisors approved a measure allowing the SFPD to monitor footage from private security cameras, with the ability to view live footage under a variety of circumstances with the owners’ consent.

Put simply, the SFPD wants the power to kill using robots. They want the ability to create a citywide network of near-constant surveillance. And they want the ability to do both everywhere.

In both proposals, the approved language is exceedingly vague. Police can deploy these killer robots in any “exigent circumstances” and can turn on their surveillance system during “events with public safety concerns.” Both phrases are broad enough to cover anything from actual crimes to peaceful protests.

While many, including the SFPD and its defenders, have argued that these measures are necessary to surveil and fight crime effectively, their scope is far broader than simply fighting crime. Taken as a whole, these measures effectively allow the SFPD to create something of a technologically advanced surveillance state, where police can monitor every corner of their city and even remotely kill crime suspects with robots that they can deploy anywhere.

But this isn’t just the case in San Francisco. There’s a reason why stories like this one sound more and more like science fiction movies: The police have been harnessing increasingly militarized technology to expand their power, often at the expense of privacy and civil rights.

You’ve probably seen pictures of police officers armed with military surplus weapons, driving in tanks and armored vehicles. That equipment is the product of a military program that grants leftover military equipment to police departments free of charge. But San Francisco’s recent schemes, and ones like them, don’t simply use leftover equipment to militarize their police departments. They push the boundaries, seeking to gain undue amounts of power by harnessing cutting-edge technology.

This isn’t a phenomenon unique to Silicon Valley. In 2020, United States Customs and Border Protection (CBP), a federal law enforcement agency, flew a Predator drone, a type commonly used by the military to monitor and kill terrorists, over George Floyd protests in Minneapolis, Minn. Not only did CBP use military drones to surveil protesters, it also directly invaded the privacy of Indigenous activists when it circled the same patrol drones over their houses. In Newark, N.J., a so-called Citizen Virtual Patrol invites anyone to tune in to hundreds of cameras livestreaming the city (cameras largely concentrated in Black and Brown communities) and report any activity that looks suspicious. This use of often military-grade, 21st-century technology reinforces systemic racism, decreases trust in law enforcement and amounts to an egregious violation of privacy.

This isn’t to say that all advanced technology should be off limits for police: Devices like non-militarized drones and gunshot detection systems have been used to avert potential disasters. But police technology has gone beyond riding through a city with a tank to appear intimidating. Military drones, bomb-detonating robots and networks of surveillance cameras threaten to expand police power to unimaginable, horrifying levels.

The use of novel, militarized and barely tested technology also raises a whole host of privacy and ethics concerns. How secure is all of the data going to these departments? What stops police departments from singling out and harassing minority communities via drone? Who is to blame if a robot accidentally kills someone? And who’s to say that police will follow use-of-force guidelines with robots when they’ve consistently violated other regulations against violence?

In addition, the decisions of police departments like the SFPD to integrate dangerous technology into policing are likely to spread to the rest of the country. Earlier this year, Oakland tried to implement similar rules allowing robots to use lethal force. In 2016, the Dallas Police Department used a robot to kill a suspect in the shooting of police officers. And, if San Francisco successfully allows its police department to use its fleet of robots to kill, it’s likely that other police departments will do the same.

We must carefully consider how we allow this technology to circulate to our local police departments. Advocacy groups like the Electronic Frontier Foundation and the American Civil Liberties Union effectively monitor police technology misuse, but we must also keep our own local police departments in check. If they gain the ability to use this kind of technology, there’s no guarantee that they’ll use it safely. Soon enough, we might start seeing killer robots at protests, or military drones monitoring activists, or livestreaming security cameras watching our every move.

Police are supposed to be protecting us, not violating our rights and enriching their military-like arsenals with unsound technology. If they continue choosing the latter, our country’s law enforcement will start to resemble a bad episode of “Black Mirror.”