What began as a shared passion for coding and app development turned into a groundbreaking academic milestone for brothers Kai Etkin and Hudson Etkin. The sophomore-senior duo recently published their research on AI’s impact on reading comprehension in a peer-reviewed journal, Frontiers in Education, and presented their findings at the Stanford Human-Centered Artificial Intelligence (HAI) AI + Education Summit in February.

The Etkin brothers’ journey into research began with childhood collaborations. Growing up in Silicon Valley, they developed an early interest in technology, especially app development.

“Coding has always been an accessible medium for us to create and innovate,” Kai said.

While their app-building collaboration peaked during quarantine, their research took shape in late 2023, growing out of an initial goal of creating an app that would make textbooks more engaging and easier to read. Around the same time, they noticed the rapid rise of AI tools in education.

“But there was very little research on its actual impact,” Kai said.

The brothers shifted from app development to academic research with a newfound question: How do AI tools impact reading comprehension?

“We specifically chose reading comprehension because it’s a very foundational skill,” Kai said. “Beginning of your education, you learn to read, and then the rest of your life you read to learn.”

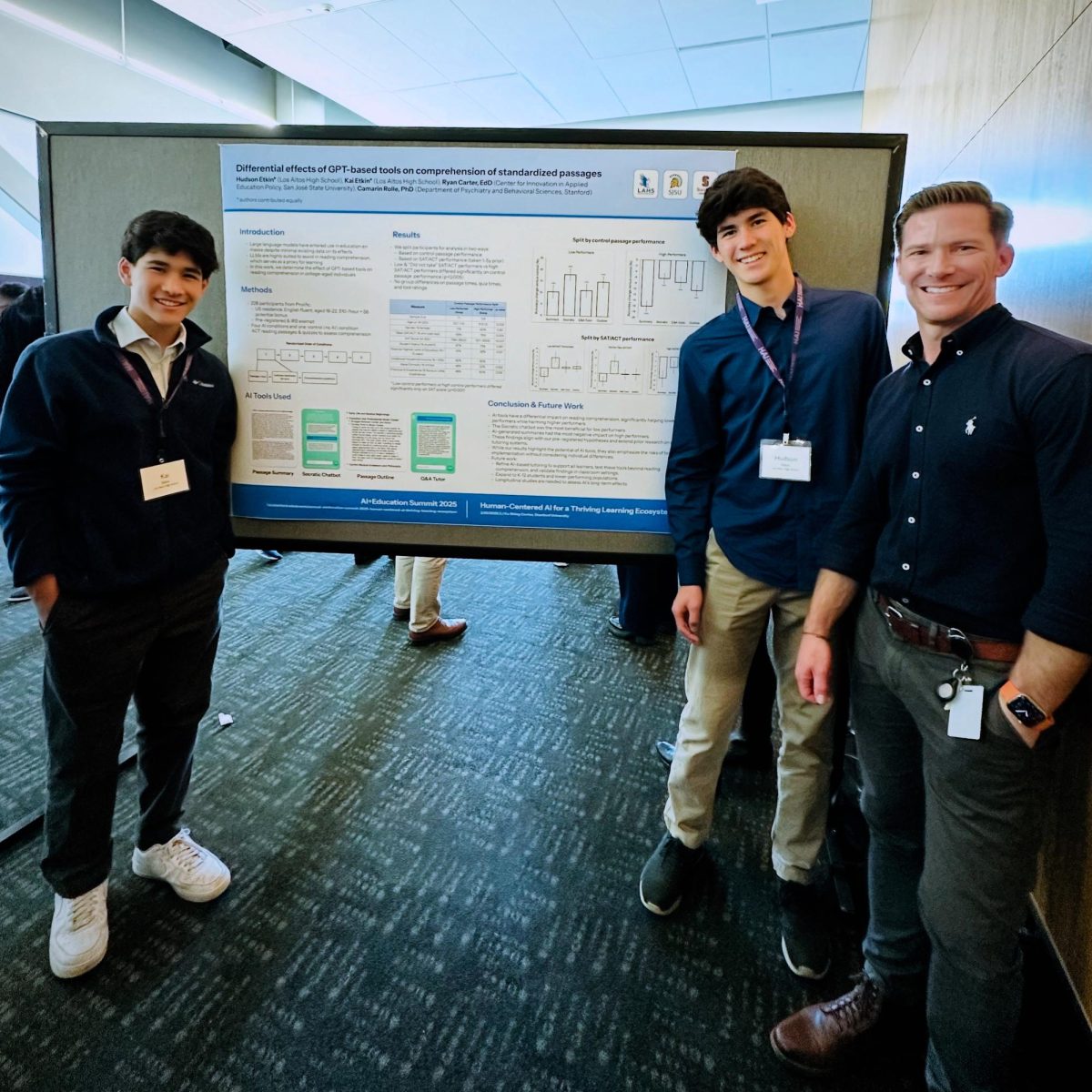

Under the mentorship of Los Altos High School counselor Ryan Carter, Kai and Hudson designed a study using standardized passages, such as those from the ACT’s reading section. They spent the next year and a half carrying the research from design through analysis, culminating in their manuscript: “Differential effects of GPT-based tools on comprehension of standardized passages.”

The brothers’ first step was building four AI tools over six months: a Socratic-method chatbot, an open-ended Q&A tutor chatbot, an AI-generated summary tool, and an AI-generated passage outline tool. They used official ACT reading passages, each paired with its corresponding multiple-choice questions, to evaluate the tools.

“We set up five conditions: four AI tools and one control condition, without AI,” Kai said. “And for every single condition, participants would read a randomized passage and then take the test.”

After obtaining Institutional Review Board (IRB) approval, which is required for studies involving human participants to protect their rights and welfare, the brothers recruited 230 participants aged 18–22 through the online research platform Prolific to test their portal.

After five to six months of data collection, the initial results disappointed Kai and Hudson.

“It was to some extent disheartening,” Kai said. “We expected these tools to help — that’s kind of what AI is able to do — but we saw essentially nothing. That was a huge challenge at first, but then we looked deeper into the data.”

Participants who scored well in the control condition saw their test scores drop when they used AI, while generally lower-performing participants who scored poorly in the control condition saw their scores rise. Because these opposite shifts offset each other, the overall scores with AI showed little change across all participants; the brothers call this pattern a differential effect.

“It was tools that we thought worked, that we spent a lot of time developing,” Hudson said. “But it took a mindset shift of being an app developer, where you build something, to being a researcher, where you’re seeking the truth. What came out of those initially disheartening results was actually much more significant for our paper and field.”

After about two months of writing their paper and a lengthy, seven-month peer-review process to publish it in the journal, Kai and Hudson finally saw tangible recognition for their work in February. The Center for Innovation in Applied Education Policy at San Jose State University invited the Etkins to speak in a webinar titled “Becoming High School Student Researchers in the Age of AI: Insights From a Student-Led Study of Improving Reading with Chatbots.” That same month, they were also invited to present a poster at the Stanford HAI AI + Education Summit, an annual conference showcasing leading research on AI in education.

“It’s intimidating to be in the same room with all these accomplished people, but Kai and Hudson are just very self-directed and great innovators,” Carter said. “They walked out of that conference with a lot of interest in their work and potential future collaborations.”

“We were the only high schoolers there, and everybody there were established researchers,” Hudson said. “Having that paper was an armor that helped us feel credible.”

On a topic as compelling and current as AI, Kai and Hudson’s findings suggest that AI tools should not be applied uniformly in education; instead, their use should be tailored to individual students’ needs.

“They were, in some ways, exploring how AI tools could actually be used as equity tools to help level the playing field for students,” Carter said.